diffusive_size_factor_AI#

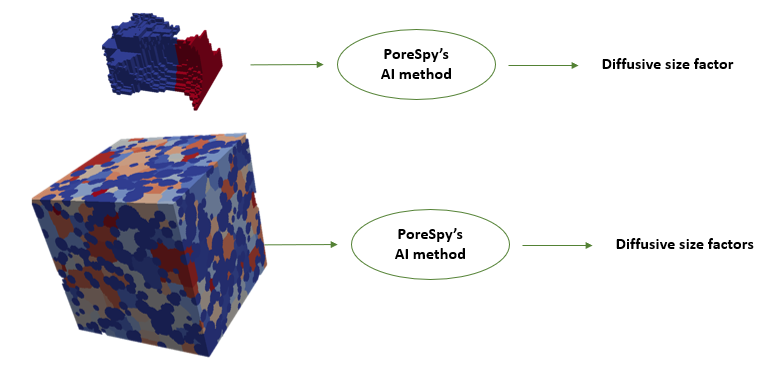

PoreSpy’s diffusive_size_factor_AI implements the steps for predicting the diffusive size factors of conduit images described here. The diffusive conductance of each conduit can then be calculated by multiplying its size factor by the diffusivity of the phase. The function takes a segmented image of a porous medium and returns an array of diffusive size factors, one for each conduit in the image. The framework can therefore be applied both to an image of a single conduit and to a segmented image of a whole porous medium.
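The relation between size factor and conductance stated above can be sketched with plain NumPy. The size factor values and the diffusivity below are made-up numbers for illustration only, and `conductance_from_size_factor` is a hypothetical helper, not part of PoreSpy.

```python
import numpy as np


def conductance_from_size_factor(size_factors, diffusivity):
    """Diffusive conductance = size factor * diffusivity of the phase."""
    return np.asarray(size_factors, dtype=float) * diffusivity


# Illustrative values only (not produced by the AI model)
size_factors_demo = np.array([2.0, 4.5, 7.25])
D_AB = 2.0e-5  # assumed diffusivity of the phase

g_demo = conductance_from_size_factor(size_factors_demo, D_AB)
print(g_demo)
```

With a diffusivity of 1 the conductances are numerically equal to the size factors, which is the convention used in the comparison later in this example.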

Trained model and supplementary materials#

To use diffusive_size_factor_AI, the trained model and the training data distribution are required. The AI model files and the additional files used in this example are available here. The folder contains the following files:

Trained model weights: This file includes only the weights of the deep learning layers. To use this file, the ResNet50 model structure must be built first.

Trained data distribution: This file is used to denormalize the predicted values, based on the normalization transform applied to the training data. The denormalizing step is included in the diffusive_size_factor_AI method.

Finite difference diffusive conductance: This file is used in this example to compare the prediction results with the finite difference method for segmented regions

Pair of regions: This file is used in this example to compare the prediction results with finite difference method for a pair of regions
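The role of the training data distribution can be illustrated with a generic normalize/denormalize round trip. This is only a sketch under an assumption: it uses a plain min-max scaling, which may differ from the transform PoreSpy actually applies, and the helper names and `g_train_demo` values are hypothetical.

```python
import numpy as np


def fit_minmax(train_values):
    """Record the min and max of the (hypothetical) training targets."""
    train_values = np.asarray(train_values, dtype=float)
    return train_values.min(), train_values.max()


def normalize(values, lo, hi):
    """Map values into [0, 1] using the training range."""
    return (np.asarray(values, dtype=float) - lo) / (hi - lo)


def denormalize(scaled, lo, hi):
    """Invert the scaling so predictions are back in physical units."""
    return np.asarray(scaled, dtype=float) * (hi - lo) + lo


# Made-up training targets, for illustration only
g_train_demo = np.array([0.5, 2.0, 8.0, 12.5])
lo, hi = fit_minmax(g_train_demo)

scaled = normalize(g_train_demo, lo, hi)
recovered = denormalize(scaled, lo, hi)
print(scaled, recovered)
```

The point is that the inverse transform needs statistics of the training targets, which is why the training data file has to be supplied alongside the weights.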

Let’s install TensorFlow and scikit-learn if they are missing, and download the model files required to run this notebook:

try:

    import tensorflow as tf

except ImportError:

    !pip install tensorflow

try:

    import sklearn

except ImportError:

    !pip install scikit-learn

import os

if not os.path.exists("sf-model-lib"):

    !git clone https://github.com/PMEAL/sf-model-lib

Also, since the model weights have been stored in chunks, they need to be recombined first:

import importlib

h5tools = importlib.import_module("sf-model-lib.h5tools")

DIR_WEIGHTS = "sf-model-lib/diffusion"

fname_in = [f"{DIR_WEIGHTS}/model_weights_part{j}.h5" for j in [0, 1]]

h5tools.combine(fname_in, fname_out=f"{DIR_WEIGHTS}/model_weights.h5")
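Recombining only works if every chunk was actually downloaded. A small guard like the following can be run on the list of part files before calling the combine step. `missing_files` is a hypothetical helper, and the temporary directory below only stands in for the cloned folder.

```python
import os
import tempfile


def missing_files(paths):
    """Return the subset of paths that do not exist as regular files."""
    return [p for p in paths if not os.path.isfile(p)]


# Demonstration in a throwaway directory: create part0 but not part1
with tempfile.TemporaryDirectory() as tmp:
    part0 = os.path.join(tmp, "model_weights_part0.h5")
    part1 = os.path.join(tmp, "model_weights_part1.h5")
    open(part0, "wb").close()  # empty placeholder file

    missing = missing_files([part0, part1])
    missing_names = [os.path.basename(p) for p in missing]

print(missing_names)
```

In the notebook, the same check could be applied to `fname_in` so that an incomplete clone is reported before the weights are combined.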

Note that to use diffusive_size_factor_AI, scikit-learn and TensorFlow must be installed. Import the necessary packages and inspect the function signature:

import inspect

import os

import warnings

import h5py

import numpy as np

import scipy as sp

from matplotlib import pyplot as plt

from sklearn.metrics import r2_score

import porespy as ps

ps.visualization.set_mpl_style()

warnings.filterwarnings("ignore")

inspect.signature(ps.networks.diffusive_size_factor_AI)

model, g_train#

Import AI model and training data from the downloaded folder:

path = "./sf-model-lib/diffusion"

path_train = os.path.join(path, "g_train_original.hdf5")

path_weights = os.path.join(path, "model_weights.h5")

g_train = h5py.File(path_train, "r")["g_train"][()]

model = ps.networks.create_model()

model.load_weights(path_weights)

regions#

We can create a 3D image using PoreSpy’s polydisperse_spheres generator and segment it using the snow_partitioning method. Note that the find_conns method returns the connections between regions in the segmented image. The order of values in conns matches that of the conns produced by network extraction. Therefore, the region with label=1 in the segmented image is mapped to index 0 in conns.

np.random.seed(17)

shape = [120, 120, 120]

dist = sp.stats.norm(loc=7, scale=5)

im = ps.generators.polydisperse_spheres(shape=shape, porosity=0.7, dist=dist, r_min=7)

results = ps.filters.snow_partitioning(im=im.astype(bool))

regions = results["regions"]

fig, ax = plt.subplots(1, 1, figsize=[4, 4])

ax.imshow(regions[:, :, 20], origin="lower", interpolation="none")

ax.axis(False);
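The offset between region labels and connection indices described above can be illustrated on a tiny labeled 2D array. `toy_find_conns` is a hypothetical stand-in written for this illustration. It is not PoreSpy’s find_conns and makes no claim about how that function is implemented.

```python
import numpy as np


def toy_find_conns(labels):
    """Return unique pairs of 0-based region indices that share a face.

    Label 0 is treated as solid/background and ignored, so a region
    labelled k appears in the output as index k - 1.
    """
    pairs = set()
    for axis in range(labels.ndim):
        moved = np.moveaxis(labels, axis, 0)
        a = moved[:-1].ravel()  # each voxel...
        b = moved[1:].ravel()   # ...and its neighbour along this axis
        mask = (a != b) & (a > 0) & (b > 0)
        for i, j in zip(a[mask], b[mask]):
            pairs.add((int(min(i, j)) - 1, int(max(i, j)) - 1))
    return np.array(sorted(pairs), dtype=int)


labels_demo = np.array([
    [1, 1, 2],
    [1, 0, 2],
    [3, 3, 2],
])
conns_demo = toy_find_conns(labels_demo)
print(conns_demo)
```

Here regions 1, 2 and 3 all touch each other, so the result contains the index pairs (0, 1), (0, 2) and (1, 2).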

throat_conns#

PoreSpy’s diffusive_size_factor_AI method takes in the segmented image, the model, the training ground truth values, and the connections between regions in the segmented image (throat conns). In this example we have created an image with voxel_size=1. For a different voxel size, the voxel_size argument must be passed to the method.

conns = ps.networks.find_conns(regions)

size_factors = ps.networks.diffusive_size_factor_AI(

    regions, model=model, g_train=g_train, throat_conns=conns

)
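Why the voxel size matters can be seen from a simple geometric argument. For a straight duct the size factor is the cross-sectional area divided by the length, so it has units of length and scales linearly with the voxel size. The sketch below uses that idealized duct only as an illustration. `duct_size_factor` is a hypothetical helper, not how the AI function computes its values.

```python
import math


def duct_size_factor(area_voxels, length_voxels, voxel_size=1.0):
    """Size factor A / L of a straight duct measured in voxels."""
    area = area_voxels * voxel_size**2  # voxel faces -> physical area
    length = length_voxels * voxel_size  # voxel count -> physical length
    return area / length


sf_unit = duct_size_factor(25, 10)                     # voxel_size = 1
sf_micron = duct_size_factor(25, 10, voxel_size=1e-6)  # 1 micron voxels

print(sf_unit, sf_micron, sf_micron / sf_unit)
```

The ratio of the two results equals the voxel size, which is why an image with a physical resolution other than 1 needs the voxel_size argument.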

Compare with finite difference#

Assuming a diffusivity of 1, the diffusive conductance of the conduits will be equal to their diffusive size factors. Now let’s compare the AI-based diffusive conductances with the conductance values calculated by the finite difference method. The finite difference results were obtained using the steps explained in the paper cited in the introduction.

Note: The finite difference-based diffusive size factors were calculated using PoreSpy’s size factor method diffusive_size_factor_DNS. However, due to the long runtime of the DNS function, the results were saved in the example data folder and are loaded from there in this example. The following code was used to estimate the finite difference-based values using PoreSpy:

g_FD = ps.networks.diffusive_size_factor_DNS(regions, conns)

fname = os.path.join(path, "g_finite_difference120-phi7.hdf5")

g_FD = h5py.File(fname, "r")["g_finite_difference"][()]

g_AI = size_factors

max_val = np.max([g_FD, g_AI])

plt.figure(figsize=[4, 4])

plt.plot(g_FD, g_AI, "*", [0, max_val], [0, max_val], "r")

plt.xlabel("g reference")

plt.ylabel("g prediction")

r2 = r2_score(g_FD, g_AI)

print(f"The R^2 prediction accuracy is {r2:.3}")
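For readers unfamiliar with the metric, the coefficient of determination reported above is one minus the ratio of the residual sum of squares to the total sum of squares. The sketch below writes that formula out in NumPy on made-up numbers. `r_squared` is a hypothetical helper and the arrays are not the notebook’s data.

```python
import numpy as np


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)         # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # total sum of squares
    return 1.0 - ss_res / ss_tot


# Illustrative reference and predicted values
g_ref_demo = np.array([1.0, 2.0, 3.0, 4.0])
g_pred_demo = np.array([1.1, 1.9, 3.2, 3.8])

r2_demo = r_squared(g_ref_demo, g_pred_demo)
print(f"{r2_demo:.3f}")
```

A value of 1 means the predictions fall exactly on the red identity line in the plot, and values below 1 indicate scatter around it.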

Note on runtime: A large part of the diffusive_size_factor_AI runtime is spent extracting the pairs of conduits, a step that is common to both the AI and finite difference methods. Once the data is prepared, the AI prediction on the tensor takes less time than the finite difference method, as shown in the cited reference paper.

Apply on one conduit#

PoreSpy’s diffusive_size_factor_AI method can also take in an image of a single pair of regions. Let’s predict the diffusive size factor for a pair of regions using both the AI and DNS methods:

fname = os.path.join(path, "pair.hdf5")

pair_in = h5py.File(fname, "r")

im_pair = pair_in["pair"][()]

conns = ps.networks.find_conns(im_pair)

sf_FD = ps.networks.diffusive_size_factor_DNS(im_pair, throat_conns=conns)

sf_AI = ps.networks.diffusive_size_factor_AI(

    im_pair, model=model, g_train=g_train, throat_conns=conns

)

print(f"Diffusive size factor from FD: {sf_FD[0]:.2f}, and AI: {sf_AI[0]:.2f}")
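For a single conduit, a relative error is often easier to interpret than the two raw values side by side. A minimal sketch, using made-up numbers in place of the FD and AI results and a hypothetical helper `relative_error`:

```python
import math


def relative_error(reference, prediction):
    """Absolute deviation of the prediction as a fraction of the reference."""
    return abs(prediction - reference) / abs(reference)


# Illustrative values only, standing in for the FD and AI size factors
err_demo = relative_error(reference=10.0, prediction=9.5)
print(f"Relative error: {err_demo:.1%}")
```

In the notebook, the same helper could be called with `sf_FD[0]` as the reference and `sf_AI[0]` as the prediction.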